In today’s digital-first economy, the infrastructure that powers online operations is no longer just a technical consideration—it’s a strategic business asset. As organizations navigate increasingly complex technological landscapes, selecting the right dedicated server hosting solution has become crucial for maintaining a competitive advantage, operational efficiency, and a robust security posture.

The Evolution of Server Hosting Solutions

Server hosting has undergone a remarkable transformation since the early days of the internet. Initially, physical servers housed on an organization’s own premises were the norm, requiring significant capital investment and specialized IT personnel. As internet adoption accelerated in the late 1990s and early 2000s, shared hosting emerged as a cost-effective alternative, allowing multiple businesses to share the resources of a single server.

The subsequent decade witnessed the rise of virtualization technologies, leading to the cloud computing revolution that promised virtually unlimited scalability and pay-as-you-go economics. Yet, amidst this evolution, dedicated server hosting has not only persisted but strengthened its position as the solution of choice for organizations with specific performance, security, and compliance requirements.

What Defines True Dedicated Server Hosting

Dedicated server hosting represents the pinnacle of exclusivity in the hosting hierarchy. Unlike shared or cloud environments, where resources are distributed among multiple clients, a dedicated server provides an entire physical machine exclusively to a single client. This means every component—from processing power to memory, storage, and network bandwidth—is committed solely to supporting one organization’s digital operations.

This exclusivity translates to complete control over hardware specifications, operating system choices, security configurations, and resource allocation. True dedicated server hosting ensures that no other tenant can impact your performance, compromise your security, or access your data, creating a controlled environment ideally suited for mission-critical applications.

Who Needs Dedicated Server Hosting in Today’s Digital Landscape

While cloud computing dominates contemporary IT conversations, dedicated server hosting remains essential for specific use cases and organizations. These typically include:

- Enterprise organizations with high-performance computing needs or applications that demand consistent, predictable performance

- Financial institutions and healthcare providers facing strict regulatory compliance requirements that necessitate complete infrastructure control

- E-commerce platforms experiencing high traffic volumes that require guaranteed resources, especially during peak seasons

- Gaming companies and streaming services where latency and performance directly impact customer experience

- Data-intensive operations such as big data analytics, machine learning workflows, or large database applications

- Organizations with specialized security requirements that demand physical isolation of computing resources

As we delve deeper into this guide, we’ll explore how dedicated server hosting provides these organizations with the foundation needed to deliver reliable, secure, and high-performing digital experiences.

Understanding Dedicated Server Hosting Fundamentals

Before investing in dedicated server infrastructure, it’s essential to understand how these solutions differ from alternatives and what technical components contribute to their superior performance profile.

Dedicated Server vs. Shared vs. Cloud Hosting: Critical Differences

The hosting landscape offers three primary paradigms, each with distinct characteristics:

Shared Hosting:

- Multiple websites and applications share a single server’s resources

- Cost-effective entry point for small websites with minimal traffic

- Limited performance capabilities due to resource contention

- Minimal configuration control and customization options

Cloud Hosting:

- Virtualized resources distributed across multiple physical machines

- Highly scalable with the ability to increase or decrease resources on demand

- Pay-for-what-you-use billing models

- Potential performance variability due to underlying infrastructure sharing

- Abstracted from physical hardware with standardized configurations

Dedicated Server Hosting:

- Exclusive use of an entire physical server

- Maximum performance with no resource competition

- Complete customization of hardware and software configurations

- Predictable performance with guaranteed resources

- Enhanced security through physical isolation

- Fixed capacity requiring upfront planning for scaling needs

- Greater control over compliance and regulatory requirements

The choice between these options ultimately depends on specific business requirements, budget constraints, and technical needs. Organizations frequently implement hybrid strategies combining multiple hosting types.

The Technical Architecture of Dedicated Servers

A dedicated server’s architecture consists of several interconnected components working harmoniously to deliver computing services:

- Physical Hardware: The tangible components housed in a data center rack, including the server chassis, power supplies, and cooling systems.

- Processor (CPU): The computational brain of the dedicated server, with enterprise-grade servers typically featuring multi-core processors from the Intel Xeon or AMD EPYC families.

- Memory (RAM): High-speed, temporary storage that allows the server to access data quickly, with enterprise servers commonly equipped with ECC (Error-Correcting Code) memory for improved reliability.

- Storage Subsystem: Persistent data storage including traditional hard disk drives (HDDs), solid-state drives (SSDs), or high-performance NVMe drives, often configured in RAID arrays for redundancy.

- Network Interface: Hardware components (NICs) that connect the server to the data center network, usually offering redundant connections for fault tolerance.

- Operating System: The fundamental software layer that manages hardware resources and provides services to applications, typically either Windows Server or a Linux distribution.

- Hypervisor (Optional): Software that enables dedicated server virtualization, allowing a single physical server to host multiple virtual machines.

- Management Subsystem: Hardware and software components enabling remote server management, including out-of-band management through technologies like IPMI or iDRAC.

This architecture creates a self-contained computing environment designed to deliver consistent performance regardless of external factors.
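Several of the components listed above are visible from inside the operating system. The following is a minimal sketch, using only the Python standard library, of how an administrator might take a quick inventory of a server. The function name `collect_inventory` and the choice of fields are assumptions made for illustration; firmware-level details such as ECC status or RAID layout need vendor tools and are not covered here.

```python
import os
import platform
import shutil
import socket


def collect_inventory(path="/"):
    """Return a small snapshot of the host as the operating system reports it."""
    usage = shutil.disk_usage(path)  # total/used/free bytes for the volume holding `path`
    return {
        "hostname": socket.gethostname(),
        "os": platform.system(),              # e.g. "Linux" or "Windows"
        "architecture": platform.machine(),   # e.g. "x86_64"
        "logical_cpus": os.cpu_count() or 0,  # cpu_count() may return None
        "disk_total_gb": round(usage.total / 1e9, 1),
        "disk_free_gb": round(usage.free / 1e9, 1),
    }


if __name__ == "__main__":
    for key, value in collect_inventory().items():
        print(f"{key}: {value}")
```

On a dedicated server these figures describe the whole physical machine, whereas on a virtual machine they describe only the slice assigned to the guest.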

Key Components That Define Dedicated Server Performance

Several critical components determine the overall performance profile of a dedicated server:

Processor Characteristics:

- Core count: More cores allow for greater parallel processing capabilities

- Clock speed: Higher frequencies enable faster instruction execution

- Cache size: Larger caches improve data access speeds

- Instruction set: Modern extensions like AVX-512 accelerate specific workloads

Memory Configuration:

- Capacity: Determines how much data can be held in fast-access memory

- Speed: Memory frequency affects data transfer rates

- Channels: Multi-channel configurations increase memory bandwidth

- Latency: Lower latency values improve responsiveness

Storage Performance:

- Drive technology: NVMe SSD > SATA SSD > HDD in terms of performance

- RAID configuration: Balances between performance, capacity, and redundancy

- I/O performance: Measured in IOPS (Input/Output Operations Per Second) and sequential throughput (MB/s)

- Connection interface: PCIe generations affect maximum throughput

Network Capabilities:

- Port speed: Typically 1 Gbps, 10 Gbps, or higher

- Bandwidth allocation: Monthly data transfer allowances

- Network redundancy: Multiple physical connections for reliability

- IP address allocation: IPv4 and IPv6 address blocks

Understanding these components helps organizations tailor dedicated server specifications to their workload requirements, ensuring optimal performance without unnecessary expenditure.
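Two of the trade-offs above reduce to simple arithmetic: how much usable space a RAID level leaves, and how much data a port could move in a month at most. The sketch below assumes identical drives and the textbook capacity rules for RAID 0, 1, 5, 6, and 10. The function names are illustrative, and real controllers and providers may reserve extra space or cap transfer below line rate.

```python
def raid_usable_tb(level, drives, drive_tb):
    """Usable capacity in TB for common RAID levels built from identical drives."""
    minimum = {0: 2, 1: 2, 5: 3, 6: 4, 10: 4}
    if level not in minimum:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < minimum[level]:
        raise ValueError(f"RAID {level} needs at least {minimum[level]} drives")
    if level == 10 and drives % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    if level == 0:
        return drives * drive_tb          # striping only, no redundancy
    if level == 1:
        return drive_tb                   # every drive holds a full copy
    if level == 5:
        return (drives - 1) * drive_tb    # one drive's worth of parity
    if level == 6:
        return (drives - 2) * drive_tb    # two drives' worth of parity
    return drives // 2 * drive_tb         # RAID 10: striped mirrored pairs


def max_monthly_transfer_tb(port_gbps, days=30):
    """Upper bound on data moved if the port ran at line rate all month."""
    bytes_per_second = port_gbps * 1e9 / 8
    return bytes_per_second * 86_400 * days / 1e12


print(raid_usable_tb(5, drives=4, drive_tb=4))   # 12
print(raid_usable_tb(10, drives=4, drive_tb=4))  # 8
print(max_monthly_transfer_tb(1))                # 324.0
```

Four 4 TB drives therefore yield 12 TB in RAID 5 but only 8 TB in RAID 10, and a 1 Gbps port tops out at roughly 324 TB per 30-day month, which is a useful ceiling when reading monthly transfer allowances.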

The Business Case for Dedicated Server Infrastructure

Beyond technical considerations, dedicated servers present compelling business advantages:

- Predictable Performance: Consistent resource availability translates to reliable user experiences, critical for customer-facing applications.

- Enhanced Security Posture: Physical isolation reduces attack vectors and simplifies compliance with standards and regulations such as PCI DSS, HIPAA, or GDPR.

- Total Cost Control: Fixed monthly costs without the usage-based surprises sometimes encountered in cloud environments.

- Customization Flexibility: Freedom to implement specialized hardware or software configurations impossible in standardized environments.

- Resource Efficiency: For workloads with steady, predictable resource requirements, dedicated servers often provide better price-performance ratios than equivalent cloud resources.

- Data Sovereignty: Ability to specify exact physical location of data storage, essential for organizations facing regulatory geographic restrictions.

- Performance Optimization: Complete control allows for fine-tuning server configurations to exact workload requirements.

For organizations with stable, performance-sensitive workloads, these benefits often outweigh the flexibility advantages of cloud platforms, particularly when considering the long-term total cost of ownership.
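The cost argument can be made concrete with a break-even calculation. The sketch below compares a flat monthly fee with hourly usage billing. Both prices are hypothetical values picked to keep the arithmetic simple, not quotes from any provider, and the comparison ignores bandwidth, storage, and staffing costs.

```python
HOURS_PER_MONTH = 730  # average month: 8,760 hours per year / 12


def breakeven_hours(dedicated_monthly, cloud_hourly):
    """Hours of cloud usage per month at which both options cost the same."""
    return dedicated_monthly / cloud_hourly


def cheaper_option(dedicated_monthly, cloud_hourly, hours_used):
    """Compare a flat dedicated fee against usage-billed cloud cost."""
    cloud_cost = cloud_hourly * hours_used
    return "dedicated" if dedicated_monthly < cloud_cost else "cloud"


# Hypothetical prices, chosen only to make the arithmetic easy to follow.
DEDICATED_MONTHLY = 300.0   # flat fee per month
CLOUD_HOURLY = 0.50         # on-demand price per hour for a comparable instance

print(breakeven_hours(DEDICATED_MONTHLY, CLOUD_HOURLY))                  # 600.0
print(cheaper_option(DEDICATED_MONTHLY, CLOUD_HOURLY, HOURS_PER_MONTH))  # dedicated
print(cheaper_option(DEDICATED_MONTHLY, CLOUD_HOURLY, 200))              # cloud
```

Under these assumed prices, a workload that runs around the clock favors the dedicated server, while one that runs only 200 hours a month is cheaper on usage-based billing.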

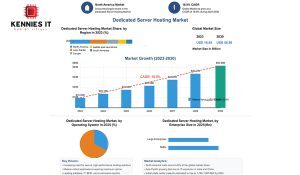

Dedicated Server Market Insights and Analytics

The dedicated server hosting market is witnessing exponential growth, driven by the increasing need for secure, high-performance hosting solutions across industries. Valued at $16.95 billion in 2023, the market is expected to grow at a compound annual growth rate (CAGR) of 18.9%, reaching $56.96 billion by 2030. This growth is fueled by businesses seeking reliable hosting environments for mission-critical applications, where exclusive access to server resources ensures maximum uptime, speed, and security. Industries such as IT, BFSI, and e-commerce are leading adopters of dedicated server hosting, leveraging its ability to handle resource-intensive workloads and safeguard sensitive data.
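As a quick consistency check on these figures, the compound growth formula can be applied directly. The sketch below only restates the numbers quoted above; the function names are illustrative.

```python
def project_value(start_value, cagr, years):
    """Compound a starting value forward at a fixed annual growth rate."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value, end_value, years):
    """Annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $16.95 billion in 2023, 18.9% CAGR, $56.96 billion by 2030.
projected_2030 = project_value(16.95, 0.189, 2030 - 2023)
rate = implied_cagr(16.95, 56.96, 2030 - 2023)

print(f"Projected 2030 market size: ${projected_2030:.2f} billion")
print(f"Implied CAGR: {rate:.1%}")
```

Compounding $16.95 billion at 18.9% for seven years gives about $56.9 billion, so the quoted start value, growth rate, and 2030 forecast agree with one another to within rounding.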

Market Analytics

- North America holds around 40% of the global market share.

- Asia-Pacific is growing fast due to IT expansion in India and China, with India alone generating 20% of global data.

- India’s data center capacity is estimated to rise to between 1,700 and 1,800 MW by 2025.

- Automation and virtualization are improving server efficiency and management.

- Higher costs remain a barrier compared to shared or VPS hosting.

- Managing dedicated servers often requires advanced technical expertise.

How Does Dedicated Server Hosting Work?

Understanding the operational mechanics of dedicated server hosting helps organizations maximize the value of their infrastructure investment.

At its core, dedicated server hosting provides exclusive access to physical computing resources housed in professional data centers. Unlike virtualized or shared environments, each component of the server—from processors to storage drives—serves only your applications and data.

The typical deployment process follows these steps:

- Specification and Configuration: The client selects server hardware specifications, operating system, and additional services based on workload requirements.

- Provisioning and Setup: The hosting provider prepares the physical server with the requested configuration, installs the chosen operating system, and performs initial security hardening.

- Network Integration: The server receives its IP address allocation and is connected to the data center’s network infrastructure with the specified bandwidth capacity.

- Access Provisioning: Administrative credentials are created and provided to the client, enabling remote management through secure channels.

- Optional Service Implementation: Additional requested services such as backup systems, security measures, or monitoring tools are configured.

- Application Deployment: The client or managed service provider installs and configures the necessary applications and services.

Once operational, traffic flows to the dedicated server through the following path:

- A user request initiates connection to your application or website

- DNS resolution directs the request to your server’s IP address

- The request traverses the internet to reach the data center network

- Data center routers and switches direct the request to your specific server

- Your dedicated server processes the request using its dedicated resources

- The response follows the reverse path back to the user

This direct path, unencumbered by resource competition from other customers, enables consistent performance and predictable user experiences.
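The DNS step in the path above can be observed with a few lines of standard-library Python. This is a minimal sketch: the function name `resolve_host` and the default port are assumptions for illustration, and the result depends on whichever resolver the local machine is configured to use.

```python
import socket


def resolve_host(hostname, port=443):
    """Return the sorted, de-duplicated IP addresses a hostname resolves to."""
    results = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    # Each result is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({sockaddr[0] for *_, sockaddr in results})
```

Calling `resolve_host` with your own domain name returns the address or addresses that clients are directed to. For a site on a single dedicated server this is typically that server’s allocated IP address, unless a CDN or proxy sits in front of it.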

For administration, most dedicated servers provide multiple management options:

- Remote Console Access: Secure Shell (SSH) for Linux or Remote Desktop Protocol (RDP) for Windows environments

- Control Panels: Web-based interfaces like cPanel, Plesk, or custom dashboards

- Out-of-Band Management: Hardware-level access through IPMI, iDRAC, or similar technologies for emergency scenarios

- API Integration: Programmatic access for automation and integration with existing management systems

These management channels enable complete control over the dedicated server infrastructure, allowing for custom configurations that precisely match business requirements.
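As an illustration of out-of-band management, the sketch below assembles an `ipmitool` command that asks a server’s management controller for its power state. It only builds and prints the command rather than running it. The controller address and user name are hypothetical placeholders, and whether a provider exposes IPMI access at all is an assumption that varies by host.

```python
import shlex


def ipmi_power_status_command(bmc_host, username):
    """Build (but do not run) an ipmitool call that queries chassis power state.

    The password is not placed on the command line: the -E flag tells
    ipmitool to read it from the IPMI_PASSWORD environment variable.
    """
    return [
        "ipmitool",
        "-I", "lanplus",      # IPMI v2.0 over LAN
        "-H", bmc_host,       # address of the management controller (BMC)
        "-U", username,
        "-E",
        "chassis", "power", "status",
    ]


# Hypothetical BMC address and account name, for illustration only.
command = ipmi_power_status_command("192.0.2.10", "admin")
print(shlex.join(command))
```

Returning an argument list rather than a single string means the command can later be passed to `subprocess.run` without shell quoting concerns.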

Types of Dedicated Server Hosting Solutions

In today’s digital-first business environment, the foundation of your online presence and application performance lies in your hosting infrastructure. Dedicated server hosting represents the premium tier of hosting solutions, providing exclusive physical server resources to a single client. This dedicated approach eliminates the “noisy neighbor” problem common in shared environments and delivers consistent performance, enhanced security, and complete control. Let’s explore the various types of dedicated server hosting solutions available, their technical specifications, and why they might be the perfect fit for your organization’s unique requirements.

Managed Dedicated Servers: Full-Service Hosting Explained

Managed dedicated servers combine the raw power of exclusive hardware with comprehensive technical support and administration. This solution effectively functions as an extension of your IT department, with seasoned professionals handling everything from initial configuration to ongoing maintenance and troubleshooting.

Why would you need managed dedicated servers?

- Limited technical expertise: Your organization may lack specialized server administrators or DevOps engineers who understand the intricacies of server management, networking configurations, and security hardening.

- Resource allocation strategy: Even with technical capabilities in-house, your team’s talents may be better utilized on core business activities and innovation rather than routine server maintenance.

- Compliance requirements: Regulated industries like healthcare (HIPAA), finance (PCI DSS), or businesses handling European customer data (GDPR) face stringent compliance requirements that demand expert implementation and documentation.

- High-availability demands: Business-critical applications require 24/7 monitoring and immediate response to potential issues, which can be challenging to maintain with an in-house team.

- Security concerns: The ever-evolving threat landscape requires constant vigilance, regular security updates, and sophisticated protection measures best handled by specialists.

Benefits of managed dedicated servers:

- Comprehensive technical support: Access to certified server administrators, network engineers, and security specialists around the clock.

- Proactive monitoring: Advanced monitoring systems track hundreds of server metrics to identify and address potential issues before they impact your business.

- Regular maintenance: Scheduled maintenance windows for critical updates, security patches, and performance optimization without disrupting operations.

- Custom configurations: Expert implementation of specific dedicated server environments tailored to your application requirements, whether it’s specialized web server configurations or database optimization.

- Backup and disaster recovery: Automated backup systems with multiple retention policies and tested disaster recovery procedures.

- Security hardening: Implementation of security best practices including firewall configuration, intrusion detection systems, DDoS protection, and regular security audits.

- Performance optimization: Continuous fine-tuning of server parameters to maximize performance for your specific workloads.

Real-world application: E-commerce platforms experiencing rapid growth often choose managed dedicated servers to ensure seamless scalability and professional handling of security concerns during high-traffic events like Black Friday sales.
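The proactive monitoring described above usually comes down to comparing sampled metrics with agreed limits. The sketch below shows that comparison in its simplest form. The metric names, limits, and sample reading are all hypothetical, and a production system would also track trends and send alerts rather than print them.

```python
def find_breaches(metrics, limits):
    """Return an alert for every metric above its limit."""
    alerts = []
    for name, limit in limits.items():
        value = metrics.get(name)
        if value is not None and value > limit:
            alerts.append(f"{name} at {value} exceeds limit {limit}")
    return alerts


# Hypothetical limits and a hypothetical sample reading (percentages).
LIMITS = {"cpu_percent": 85, "memory_percent": 90, "disk_percent": 80}
sample = {"cpu_percent": 42, "memory_percent": 93, "disk_percent": 81}

for alert in find_breaches(sample, LIMITS):
    print(alert)
```

With this sample, memory and disk usage are flagged while CPU usage is not, which is the kind of early warning a managed provider acts on before users notice a problem.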

Windows Dedicated Servers: When Microsoft Ecosystems Rule

Windows dedicated servers provide the ideal environment for businesses deeply integrated with Microsoft’s technology stack. These servers run Microsoft Windows Server operating systems (2019, 2022) and support the full range of Microsoft applications and services.

Why would you need Windows dedicated servers?

- Application compatibility: Your business relies on Windows-exclusive software or specialized applications built for Microsoft environments that have no Linux equivalent.

- Microsoft development stack: Your development team works primarily with the .NET Framework, C#, ASP.NET, or other Microsoft development technologies.

- Database requirements: You’re heavily invested in Microsoft SQL Server and need optimized performance without the complexity of running it on alternative operating systems.

- Active Directory integration: Your organization requires centralized user management, permission controls, and policy enforcement through Active Directory.

- Exchange and SharePoint: You need to host Microsoft collaboration tools on-premises or in a private environment for security or compliance reasons.

- Technical familiarity: Your IT team possesses strong Windows server administration skills but limited experience with Linux environments.

Benefits of Windows dedicated servers:

- Familiar interface: Windows Server provides an intuitive graphical user interface and PowerShell for advanced automation, reducing the learning curve for administrators.

- Strong Microsoft ecosystem integration: Seamless interaction with Microsoft services including Azure, Office 365, and Microsoft development tools.

- Remote administration: Built-in Remote Desktop Protocol (RDP) capabilities enable secure, comprehensive remote server management with a full GUI experience.

- Enterprise-grade reliability: Windows Server includes high-availability features like failover clustering, Network Load Balancing, and Storage Spaces Direct.

- Extensive support: Microsoft provides regular security updates, technical documentation, and support resources for all supported Windows Server versions.

- Simplified licensing: Consolidated licensing options for Microsoft products and the ability to leverage existing Microsoft enterprise agreements.

- Hybrid cloud capabilities: Native integration with Azure services for extending on-premises capabilities to the cloud when needed.

Real-world application: Financial services companies often deploy Windows dedicated servers for their trading platforms and CRM systems due to the robust security features, comprehensive audit capabilities, and compatibility with industry-specific software built for Windows environments.

Linux Dedicated Servers: Open-Source Power and Flexibility

Linux dedicated servers represent the backbone of internet infrastructure, powering everything from small websites to massive cloud platforms. These open-source operating systems offer unmatched flexibility, stellar performance, and cost-effectiveness for businesses of all sizes.

Why would you need Linux dedicated servers?

- Performance optimization: Linux systems typically consume fewer resources than Windows counterparts, allowing more processing power for your applications.

- Custom environment requirements: You need fine-grained control over every aspect of your server configuration, from kernel parameters to specialized software stacks.

- Web hosting and development: Your applications use LAMP stack (Linux, Apache/Nginx, MySQL/MariaDB/PostgreSQL, PHP/Python/Perl) or similar open-source technologies.

- Containerization and orchestration: You’re implementing Docker containers, Kubernetes orchestration, or other modern deployment technologies that perform optimally on Linux.

- Budget considerations: You want to eliminate operating system licensing costs while maintaining enterprise-grade reliability.

- Security priorities: You require granular security controls, comprehensive auditing capabilities, and the ability to implement security hardening at every level.

- Automation requirements: Your DevOps practices depend on sophisticated automation and scripting capabilities native to Linux environments.

Benefits of Linux dedicated servers:

- Superior resource utilization: Linux typically requires less memory, storage, and processing power for the operating system itself, leaving more resources available for your applications.

- Extensive customization options: Choose from multiple distributions (Ubuntu, CentOS, Debian, RHEL) and customize every aspect of the system from kernel modules to service configurations.

- Robust security architecture: User permission systems, SELinux/AppArmor mandatory access controls, comprehensive firewall capabilities, and rapid security patches that address vulnerabilities.

- Powerful command-line utilities: Sophisticated shell scripting and native tools for system administration enable efficient management and automation.

- Scalability advantages: Linux excels in clustering, load balancing, and distributed system architectures, making it ideal for scaling applications.

- Package management: Centralized software repositories with dependency management simplify software installation, updates, and maintenance.

- Community support: Access to extensive documentation, forums, and community resources beyond vendor-specific support channels.

- Interoperability: Strong support for industry standards and protocols ensures smooth integration with diverse systems and services.

Real-world application: Content delivery networks and video streaming services typically deploy Linux dedicated servers because of their exceptional network throughput, resource efficiency, and ability to handle thousands of concurrent connections with minimal overhead.

Bare Metal Servers: Raw Performance Without Virtualization

Bare metal servers provide direct access to physical hardware without the abstraction layer of virtualization, delivering uncompromised performance for demanding applications. These high-performance machines offer dedicated resources without the overhead associated with hypervisors.

Why would you need bare metal dedicated servers?

- Performance-critical applications: Your workloads require every ounce of computing power and cannot tolerate the performance penalties of virtualization layers.

- Specialized hardware requirements: Your applications need direct access to specific hardware components like GPUs, FPGAs, or high-performance storage systems.

- Low-latency demands: Your services (like high-frequency trading platforms or real-time analytics) require microsecond-level responsiveness that virtualization might impact.

- Resource-intensive databases: You operate large-scale database systems where consistent I/O performance is crucial for transaction processing and query response times.

- Predictable performance: Your applications cannot tolerate the potential resource fluctuations that might occur in virtualized environments.

- Big data processing: You run data-intensive applications like Hadoop clusters, Apache Spark jobs, or machine learning workloads that benefit from direct hardware access.

- Gaming servers: You host multiplayer gaming platforms where consistent performance and minimal latency are essential to user experience.

Benefits of bare metal servers:

- Maximum performance: Elimination of hypervisor overhead translates to faster processing, higher throughput, and reduced latency.

- Consistent resource availability: No resource contention with other virtual machines ensures predictable performance even under heavy loads.

- Full hardware control: Direct access to CPU, RAM, storage, and network interfaces without abstraction layers or resource schedulers.

- Enhanced security isolation: Physical separation from other customers’ environments provides an additional security boundary beyond software-based isolation.

- Custom hardware options: Ability to specify exact hardware configurations, including specialized components like high-performance NVMe storage or GPU accelerators.

- Optimized licensing: Some software licenses are priced per physical server rather than per core or socket, potentially reducing licensing costs.

- Simplified troubleshooting: Elimination of virtualization-related complexity makes performance analysis and problem resolution more straightforward.

- Regulatory compliance: Some compliance frameworks require physical isolation for certain types of sensitive data processing.

Real-world application: AI research teams often leverage bare metal dedicated servers with multiple GPU accelerators for machine learning model training, benefiting from the direct hardware access and elimination of virtualization overhead that could slow down computation-intensive workloads.
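One rough way to check whether an operating system is running directly on hardware is to look for the `hypervisor` CPU flag that x86 Linux guests normally expose in `/proc/cpuinfo`. The sketch below is a heuristic under that assumption: it applies to x86 Linux only, and a missing flag is a strong hint rather than proof of bare metal, since a hypervisor can be configured to hide it.

```python
def has_hypervisor_flag(cpuinfo_text):
    """True if any 'flags' line in /proc/cpuinfo output lists 'hypervisor'."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags" and "hypervisor" in value.split():
            return True
    return False


def running_under_hypervisor(path="/proc/cpuinfo"):
    """Check the live system; returns None where the file is unavailable."""
    try:
        with open(path, encoding="utf-8") as handle:
            return has_hypervisor_flag(handle.read())
    except OSError:
        return None


if __name__ == "__main__":
    print(running_under_hypervisor())
```

Keeping the parsing in `has_hypervisor_flag` separate from the file access makes the check easy to run against saved `/proc/cpuinfo` output from other machines.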

Dedicated Server Rentals: Flexible Options for Temporary Needs

Dedicated server rentals provide high-performance computing resources with shorter commitment periods, ideal for businesses with temporary projects, seasonal demands, or testing requirements. These solutions combine the power of dedicated hardware with the flexibility of adjustable terms.

Why would you need dedicated server rentals?

- Project-based requirements: You’re launching a time-limited project like a marketing campaign, product launch, or research initiative that requires significant but temporary computing resources.

- Seasonal traffic patterns: Your business experiences predictable traffic spikes during specific periods (holidays, enrollment seasons, annual events) that exceed your regular infrastructure capacity.

- Development and testing: You need isolated environments for development, quality assurance, or load testing that mirror production specifications without long-term commitment.

- Proof of concept implementations: You want to validate application performance on dedicated server infrastructure before committing to long-term contracts.

- Disaster recovery testing: You need temporary resources to conduct thorough disaster recovery drills without disrupting production systems.

- Migration staging: You require interim infrastructure during complex migration projects from legacy systems to new environments.

- Budget constraints: Your capital expenditure budget is limited, but operating expenses can accommodate temporary dedicated resources.

Benefits of dedicated server rentals:

- Financial flexibility: Convert capital expenditures to operational expenses while avoiding long-term financial commitments.

- Rapid deployment: Many providers offer quick provisioning for rented dedicated servers, often within hours instead of days or weeks.

- Scalable commitments: Rental terms typically range from monthly to quarterly, allowing you to extend as needed or terminate when the requirement ends.

- Performance testing: Validate application performance on various hardware configurations before making permanent infrastructure decisions.

- Capacity planning: Use temporary resources to gather performance metrics that inform long-term infrastructure planning.

- Geographic flexibility: Deploy temporary servers in different regions to test latency, compliance, or market-specific requirements.

- Hardware variety: Access to different processor generations, storage technologies, or network configurations without purchasing diverse equipment.

- Risk mitigation: Test significant changes or updates in isolated environments that mirror production without risking operational systems.

Real-world application: Event management companies often utilize dedicated server rentals for virtual conferences and online events, scaling up infrastructure for the duration of the event and its immediate aftermath, then scaling down when demand returns to normal levels.

Hybrid Dedicated Server Solutions: Combining Cloud and Dedicated Infrastructure for Seamless Server Migration

Hybrid dedicated solutions merge traditional dedicated servers with cloud hosting services to create versatile infrastructures that leverage the strengths of both approaches. This architecture provides a strategic balance between control, performance, and flexibility tailored to diverse application requirements.

Why would you need hybrid dedicated solutions?

- Workload optimization: Your application portfolio includes both consistent baseline workloads ideal for dedicated hardware and variable workloads better suited for cloud scaling.

- Cost efficiency goals: You want to maximize resource utilization by maintaining consistent workloads on dedicated hardware while handling overflow in the cloud.

- Security and compliance requirements: Some data or processes must remain on physically isolated dedicated server hardware due to regulatory requirements, while less sensitive operations can leverage cloud resources.

- Disaster recovery strategy: You need cost-effective business continuity solutions without duplicating your entire physical infrastructure.

- Geographic distribution: Your users span multiple regions, requiring both centralized processing and distributed content delivery capabilities.

- Legacy system integration: You maintain legacy applications that require dedicated hardware while developing new cloud-native services.

- Cloud migration strategy: You’re gradually transitioning to cloud infrastructure and need a phased approach rather than an all-at-once “lift and shift” migration.

- Performance-sensitive applications: Your core databases or transaction processing systems require dedicated server resources, while web frontends can scale in the cloud.

Benefits of hybrid dedicated solutions:

- Optimized resource allocation: Deploy each workload on the most appropriate infrastructure based on its specific requirements and characteristics.

- Cost-effective scaling: Maintain baseline capacity on dedicated servers while handling traffic spikes and variable loads in the cloud.

- Enhanced reliability: Distribute services across multiple infrastructure types to eliminate single points of failure and improve overall resilience.

- Data sovereignty compliance: Keep sensitive data on dedicated hardware in specific geographic locations while serving content globally through cloud distribution.

- Performance predictability: Critical applications benefit from consistent dedicated resources while supporting services scale elastically.

- Flexible development environments: Development and testing can occur in cloud environments while production runs on dedicated hardware (or vice versa).

- Seamless resource connectivity: High-bandwidth, low-latency connections between dedicated and cloud resources create a unified computing environment.

- Future-proofed architecture: Adopt cloud technologies incrementally while maintaining essential dedicated server infrastructure, allowing for gradual transformation.

- Vendor diversification: Reduce dependency on any single provider by strategically distributing workloads across different infrastructure types.

Selecting the Right Dedicated Server Configuration

Choosing appropriate dedicated server specifications requires balancing performance requirements against budget constraints. Understanding the impact of various hardware components helps organizations make informed decisions that avoid both over-provisioning and performance bottlenecks.

Hardware Specifications That Matter for Your Workloads

Different workloads stress server components in distinct ways, making it essential to align hardware specifications with specific application requirements:

Web Serving Workloads:

- Moderate CPU with high single-thread performance

- Medium memory allocation (16-32GB typical)

- Fast storage for static content delivery

- High network bandwidth capacity

- Multiple IP addresses for hosting numerous domains

Database Workloads:

- High core count CPUs for query parallelization

- Large memory capacity for caching and query execution

- High-performance storage (preferably NVMe) with RAID protection

- Storage configured for appropriate write endurance

- Redundant power and network connections for reliability

Application Hosting:

- Balanced CPU with good single and multi-thread performance

- Generous memory allocation based on application requirements

- Moderate to high storage performance

- Application-appropriate RAID configuration

Big Data Processing:

- Maximum core count CPUs

- Very large memory configurations

- Extensive high-performance storage capacity

- High-speed network connectivity

- Potential for specialized accelerators (GPUs, FPGAs)

Analyzing application resource utilization patterns before server selection ensures appropriate resource allocation and prevents performance limitations.

Processor Options: From Entry-Level to Enterprise-Grade CPUs

The processor forms the computational foundation of your dedicated server, and selecting the right CPU architecture and specifications is paramount to achieving optimal performance for your specific workloads.

Entry-Level (1-2 Sockets, 4-16 Cores Total):

Entry-level dedicated server processors provide sufficient computational power for smaller workloads while maintaining reasonable power efficiency and cost-effectiveness:

- Intel Xeon E-series processors (such as the E-2300 family) offer 4-8 cores with clock speeds up to 5.1GHz and support for basic server features like ECC memory. These processors excel in single-threaded performance, making them suitable for web hosting, small business applications, and lightweight databases.

- AMD EPYC 3000-series processors provide competitive alternatives with efficient performance and modern architecture advantages. These embedded-focused processors offer up to 16 cores and 32 threads with integrated security features, making them well-suited for edge computing applications and space-constrained deployments.

Entry-level processors typically support moderate memory capacities (up to 128GB), limited PCIe lanes, and fewer memory channels compared to their higher-tier counterparts.

Mid-Range (1-2 Sockets, 16-64 Cores Total):

Mid-range dedicated server processors balance performance and efficiency for mainstream enterprise workloads:

- Intel Xeon Silver and Gold processors (from the Scalable Processor family) feature increased core counts (12-40 cores), expanded cache sizes, and support for larger memory configurations. These processors include advanced features like Intel Speed Select Technology for workload optimization and typically support dual-socket configurations for expanded processing capability.

- AMD EPYC 7003-series (Milan) processors deliver impressive core density (up to 64 cores per socket) with strong per-core performance and extensive PCIe 4.0 lane support. These processors have gained significant market share in virtualization environments due to their exceptional core-per-dollar value proposition.

Mid-range processors typically support larger memory capacities (up to 4TB per socket), more PCIe lanes for expansion, and enhanced reliability features like Advanced RAS (Reliability, Availability, and Serviceability).

High-End (2-4+ Sockets, 64-256+ Cores Total):

Enterprise processors represent the pinnacle of dedicated server CPU technology, designed for mission-critical and highly demanding applications:

- Intel Xeon Platinum processors feature maximum core counts (up to 40 cores per socket), the highest clock speeds within the Xeon family, and support for advanced reliability features. These processors excel in environments requiring the absolute highest per-core performance and support configurations with up to eight sockets for massive computational capacity.

- AMD EPYC 9004-series (Genoa) processors offer industry-leading core counts (up to 96 cores per socket) with support for DDR5 memory and PCIe 5.0 connectivity. These processors deliver exceptional multi-threaded performance and energy efficiency for highly parallel workloads.

Enterprise processors support maximum memory capacities (often 6TB+ per socket), extensive expansion capabilities through numerous PCIe lanes, and the most sophisticated reliability features. These processors are designed for enterprise databases, extensive virtualization environments, high-performance computing (HPC), and computationally intensive applications like AI/ML workloads.

When evaluating processors, consider these critical factors:

- Core count vs. clock speed: Higher core counts benefit parallel workloads like virtualization, while higher clock speeds benefit single-threaded applications.

- Cache hierarchy: Larger cache sizes improve performance for applications with repetitive data access patterns.

- Memory channels and capacity: More memory channels provide increased bandwidth for memory-intensive applications.

- Thermal design power (TDP): Higher TDP values indicate greater power consumption and cooling requirements.

- Instruction set extensions: Specialized instructions (AVX-512, etc.) can dramatically accelerate specific operations for compatible software.
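The core count versus clock speed trade-off can be approximated with Amdahl's law. The sketch below is an illustration only: the guide does not name Amdahl's law, and the scoring function, the example core counts, and the clock speeds are assumptions chosen for the comparison, not benchmark results.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over a single core when only part of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def relative_throughput(parallel_fraction: float, cores: int, clock_ghz: float) -> float:
    """Rough score (hypothetical): clock speed scaled by the Amdahl speedup."""
    return clock_ghz * amdahl_speedup(parallel_fraction, cores)


if __name__ == "__main__":
    # Compare a few fast cores against many slower cores for two workloads.
    for fraction in (0.30, 0.95):
        few_fast = relative_throughput(fraction, cores=8, clock_ghz=5.0)
        many_slow = relative_throughput(fraction, cores=64, clock_ghz=3.0)
        better = "8 fast cores" if few_fast > many_slow else "64 slower cores"
        print(f"parallel fraction {fraction:.2f}: {better} score higher")
```

Under these assumptions, a mostly serial workload favors the higher clock speed, while a highly parallel workload favors the higher core count. Real results also depend on cache, memory bandwidth, and software licensing, so treat this as a first-pass estimate.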

Memory Configurations: RAM Requirements for Different Applications

Server memory (RAM) directly impacts performance by determining how much data can be actively processed without resorting to significantly slower storage systems. Insufficient memory creates bottlenecks as the system constantly swaps data between RAM and storage devices.

Memory Requirements by Application Type

Different workloads have vastly different memory requirements:

Web Hosting and Content Management Systems:

- Basic websites and brochure sites: 8-16GB RAM

- Content management systems (WordPress, Drupal): 16-32GB RAM

- High-traffic publishing platforms: 32-64GB RAM or more

Database Servers:

- Small databases (<100GB): 16-32GB RAM

- Medium databases (100GB-1TB): 64-128GB RAM

- Large enterprise databases (>1TB): 256GB-2TB+ RAM

Database memory requirements are particularly dependent on the specific database technology and access patterns. In-memory databases like Redis or applications utilizing extensive caching mechanisms benefit enormously from abundant RAM resources.

Virtualization and Container Hosts:

- Small-scale virtualization (5-10 VMs): 64-128GB RAM

- Medium virtualization environments (10-50 VMs): 256-512GB RAM

- Enterprise virtualization platforms (50+ VMs): 512GB-4TB+ RAM

Virtualization hosts must accommodate not only the cumulative memory needs of all guest systems but also maintain sufficient overhead for the hypervisor’s own operations.
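As a rough illustration of that sizing rule, the following sketch adds guest memory, a hypervisor reserve, and a safety margin. The 8GB hypervisor reserve and 20% headroom are placeholder assumptions, not vendor recommendations; substitute the figures from your hypervisor's documentation.

```python
def host_memory_gb(guest_memory_gb, hypervisor_overhead_gb=8.0, headroom=0.20):
    """Estimate host RAM: all guests plus hypervisor reserve, plus spare headroom.

    hypervisor_overhead_gb and headroom are illustrative defaults (assumptions).
    """
    if hypervisor_overhead_gb < 0 or headroom < 0:
        raise ValueError("overhead and headroom must be non-negative")
    total_guests = sum(guest_memory_gb)
    return (total_guests + hypervisor_overhead_gb) * (1.0 + headroom)


if __name__ == "__main__":
    # Ten hypothetical guests with 16GB each.
    guests = [16] * 10
    print(f"Suggested host memory: {host_memory_gb(guests):.0f}GB")
```

The result is a lower bound for planning. Memory overcommit features can reduce it, while memory-hungry guests or future growth can raise it.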

High-Performance Computing and AI/ML Workloads:

- Development and testing: 64-128GB RAM

- Production training environments: 256GB-1TB RAM

- Large-scale model training: 1TB-4TB+ RAM

Beyond Capacity: Memory Quality Considerations

While capacity receives primary focus, several other memory characteristics significantly impact dedicated server performance:

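Memory Speed determines data transfer rates between memory and CPU. Although it is commonly quoted in MHz, the figure is really a transfer rate in megatransfers per second (MT/s). Modern server platforms support rates ranging from 2666 MT/s with DDR4 to 4800 MT/s and beyond with DDR5. Higher rates benefit bandwidth-sensitive applications but often come with looser timings, so latency does not necessarily improve.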

Error-Correcting Code (ECC) memory protects against data corruption by detecting and correcting single-bit memory errors. ECC memory is standard in servers and critical for maintaining data integrity, particularly for long-running processes where even rare memory errors become statistically significant over time.

Memory Ranks and Population affect both performance and capacity. Dual-rank memory modules often provide better performance than single-rank configurations, while fully populating all memory channels ensures maximum bandwidth utilization.

Memory Channel Configuration determines available bandwidth. Modern server processors support 6-12 memory channels per socket depending on the platform (for example, 8 on AMD EPYC 7003 and 12 on EPYC 9004), and asymmetric memory population across channels can create performance bottlenecks.
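The effect of channel population on bandwidth can be estimated with simple arithmetic: each populated channel moves 8 bytes per transfer over a 64-bit data bus. The sketch below computes theoretical peak figures only; the channel counts and the 3200 MT/s rate are example values, and sustained real-world bandwidth is lower.

```python
BYTES_PER_TRANSFER = 8  # a 64-bit memory channel moves 8 bytes per transfer


def peak_bandwidth_gbs(populated_channels: int, transfer_rate_mts: int) -> float:
    """Theoretical peak memory bandwidth in GB/s for one socket."""
    if populated_channels < 1 or transfer_rate_mts < 1:
        raise ValueError("channels and transfer rate must be positive")
    megabytes_per_second = populated_channels * transfer_rate_mts * BYTES_PER_TRANSFER
    return megabytes_per_second / 1000


if __name__ == "__main__":
    full = peak_bandwidth_gbs(populated_channels=8, transfer_rate_mts=3200)
    half = peak_bandwidth_gbs(populated_channels=4, transfer_rate_mts=3200)
    print(f"8 channels populated: {full:.1f} GB/s peak")
    print(f"4 channels populated: {half:.1f} GB/s peak")
```

Populating only half of the channels halves the theoretical peak, which is why filling every channel with matching modules is usually preferable to using fewer, larger modules.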

Properly sized memory configurations prevent both wasteful over-provisioning and performance-limiting paging to disk.

Storage Solutions: HDD, SSD, NVMe and RAID Configurations

Storage technology has undergone revolutionary advancement in recent years, with solid-state technologies dramatically outpacing traditional mechanical drives in performance while steadily closing the gap in cost-per-gigabyte.

Storage Technologies Compared

HDD (Hard Disk Drive) Solutions:

- Capacity: Modern enterprise HDDs offer capacities from 2TB to 20TB per drive

- Performance: 150-200 IOPS (Input/Output Operations Per Second), sequential read/write speeds of 150-250MB/s

- Use cases: Archival storage, cold data, backup repositories, bulk storage where performance is secondary

- Advantages: Lowest cost per GB ($0.01-0.03/GB), established reliability metrics

- Disadvantages: Significantly slower than solid-state options, mechanical components prone to failure

SATA SSD Options:

- Capacity: Typically 480GB to 8TB per drive

- Performance: 50,000-100,000 IOPS, sequential read/write speeds of 550-600MB/s

- Use cases: Operating systems, application storage, mixed workload environments, budget-conscious performance improvements

- Advantages: Roughly 2-4x the sequential throughput of HDDs and random IOPS that are hundreds of times higher, no mechanical components

- Disadvantages: Limited by SATA interface bandwidth, higher cost than HDDs ($0.08-0.15/GB)

SAS SSD Options:

- Capacity: Enterprise SAS SSDs range from 800GB to 15.36TB

- Performance: 100,000-200,000 IOPS, sequential read/write speeds up to 1,200MB/s

- Use cases: Critical applications requiring high durability, database storage, mixed read/write workloads

- Advantages: Enterprise reliability features, dual-port capability for redundancy

- Disadvantages: Higher cost than SATA SSDs ($0.15-0.30/GB), still limited by interface bandwidth

NVMe Storage:

- Capacity: Consumer drives from 500GB to 4TB, enterprise options up to 30TB

- Performance: 400,000-1,000,000+ IOPS, sequential read/write speeds of 3,000-7,000MB/s

- Use cases: High-performance databases, real-time analytics, low-latency applications

- Advantages: Roughly 5-12x the sequential throughput of SATA SSDs, significantly lower latency

- Disadvantages: Highest cost ($0.20-0.50/GB for enterprise drives), increased power consumption
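To compare tiers for a given capacity, the per-gigabyte price ranges quoted above can be turned into a quick budget estimate. This is a sketch that reuses this guide's indicative prices; the tier names in the dictionary are labels invented for the example, and actual drive pricing varies by vendor and over time.

```python
# Indicative (low, high) cost per GB in USD, taken from the ranges quoted above.
COST_PER_GB_USD = {
    "hdd": (0.01, 0.03),
    "sata_ssd": (0.08, 0.15),
    "sas_ssd": (0.15, 0.30),
    "nvme": (0.20, 0.50),
}


def cost_range_usd(tier: str, capacity_tb: float) -> tuple:
    """Return the (low, high) raw media cost for a capacity in decimal TB."""
    if tier not in COST_PER_GB_USD:
        raise ValueError(f"unknown storage tier: {tier}")
    low, high = COST_PER_GB_USD[tier]
    capacity_gb = capacity_tb * 1000
    return (low * capacity_gb, high * capacity_gb)


if __name__ == "__main__":
    for tier in COST_PER_GB_USD:
        low, high = cost_range_usd(tier, capacity_tb=10)
        print(f"{tier:>8}: ${low:,.0f} to ${high:,.0f} for 10TB")
```

The estimate covers raw media only. RAID overhead, controllers, and spare drives add to the total.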

RAID Configurations for Performance and Reliability

RAID (Redundant Array of Independent Disks) configurations combine multiple physical drives into logical units to improve performance, reliability, or both:

RAID 0 (Striping): Data is split across multiple drives, improving performance but offering no redundancy. A failure of any drive results in complete data loss. This configuration is rarely used in production environments except for temporary or easily reproducible data.

RAID 1 (Mirroring): Data is duplicated across drives, providing excellent redundancy but halving usable capacity. This configuration offers improved read performance but similar write performance to a single drive.

RAID 5 (Striping with Distributed Parity): Requires a minimum of three drives and provides space-efficient redundancy through distributed parity information. This configuration can survive a single drive failure but suffers from poor performance during rebuild operations and vulnerability during reconstruction.

RAID 6 (Striping with Dual Parity): Similar to RAID 5 but uses two parity blocks, allowing survival of two simultaneous drive failures. This configuration provides better reliability than RAID 5 at the cost of additional capacity overhead and slightly reduced write performance.

RAID 10 (Mirror + Stripe): Combines mirroring and striping for both redundancy and performance. This configuration requires a minimum of four drives and can withstand multiple drive failures as long as no mirror pair loses both drives.

Enterprise environments often implement hardware RAID through dedicated RAID controllers with battery-backed cache to protect against data loss during power failures. For NVMe storage, software RAID solutions are increasingly common as these ultra-fast drives can saturate the capabilities of many hardware controllers.
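The capacity cost of each RAID level described above can be sketched as a small calculator. It assumes identical drives and treats RAID 1 as a single mirror set; the function name and the minimum-drive table are written for this example and are not taken from any RAID tool.

```python
# Minimum drive counts per RAID level (RAID 6 needs four drives for dual parity).
MIN_DRIVES = {"0": 2, "1": 2, "5": 3, "6": 4, "10": 4}


def raid_usable_tb(level: str, drives: int, drive_tb: float) -> float:
    """Usable capacity in TB for an array of identical drives."""
    if level not in MIN_DRIVES:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < MIN_DRIVES[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DRIVES[level]} drives")
    if level == "0":
        return drives * drive_tb        # striping only, no redundancy
    if level == "1":
        return drive_tb                 # every drive holds the same data
    if level == "5":
        return (drives - 1) * drive_tb  # one drive's worth of parity
    if level == "6":
        return (drives - 2) * drive_tb  # two drives' worth of parity
    if drives % 2:
        raise ValueError("RAID 10 needs an even number of drives")
    return drives // 2 * drive_tb       # half the drives hold mirror copies


if __name__ == "__main__":
    for level in ("0", "5", "6", "10"):
        usable = raid_usable_tb(level, drives=8, drive_tb=4.0)
        print(f"RAID {level:>2} with 8 x 4TB drives: {usable:.0f}TB usable")
```

Comparing the output for the same drive set makes the trade-off concrete: each step up in fault tolerance gives up usable capacity.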

Network Architecture: Bandwidth, Port Speed, and IP Allocations

Network capabilities form the critical path between your dedicated server and its users, making network architecture a fundamental consideration rather than an afterthought.

Network Interface Capabilities

Port Speed Options:

- 1Gbps: Entry-level connectivity sufficient for low-traffic websites and basic applications

- 10Gbps: Standard for modern servers, supporting most business applications and moderate traffic volumes

- 25Gbps/40Gbps: Enhanced performance for busy web services, content delivery, and multi-server environments

- 100Gbps: Ultra-high-performance connectivity for data centers, large-scale content delivery, and specialized applications

Many enterprise dedicated servers now include dual or quad-port network interfaces to support link aggregation (combining multiple physical connections) or network segregation (separating traffic types across different physical interfaces).

Bandwidth Allocation and Management

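In hosting plans, bandwidth usually refers to the volume of data your server can transfer over a billing period, typically measured in terabytes per month. It is distinct from port speed, which caps the instantaneous transfer rate: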

- Small websites: 1-5TB monthly bandwidth

- Medium business applications: 10-50TB monthly bandwidth

- High-traffic services: 100TB+ monthly bandwidth
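Monthly transfer allowances and port speeds describe the same traffic in different units, so it can help to convert between them. The sketch below assumes a 30-day month and decimal units (1TB = 10^12 bytes); both are simplifying assumptions, and providers may count differently.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day billing month


def average_mbps(monthly_tb: float) -> float:
    """Average sustained rate in Mbps needed to transfer monthly_tb in a month."""
    bits = monthly_tb * 1e12 * 8
    return bits / SECONDS_PER_MONTH / 1e6


def max_monthly_tb(port_gbps: float) -> float:
    """Upper bound on monthly transfer in TB if a port ran at line rate nonstop."""
    bits = port_gbps * 1e9 * SECONDS_PER_MONTH
    return bits / 8 / 1e12


if __name__ == "__main__":
    for allowance in (5, 50, 100):
        print(f"{allowance}TB/month averages {average_mbps(allowance):.1f} Mbps")
    print(f"A saturated 1Gbps port moves about {max_monthly_tb(1):.0f}TB/month")
```

Average rates hide peaks, so a port should be sized for the busiest periods rather than for the monthly average.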

When selecting bandwidth plans, consider implementing:

- Burstable billing models that allow exceeding standard allocations during traffic spikes

- 95th percentile billing, which discards the highest 5% of usage samples so that short-lived traffic spikes do not affect the bill

- Quality of Service (QoS) mechanisms to prioritize critical traffic during congestion
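To make 95th percentile billing concrete, the sketch below sorts a month of usage samples, discards the highest 5%, and bills at the highest remaining value. The function name is hypothetical, and providers differ in sampling interval and in how they round the cutoff, so treat this as one common interpretation rather than any specific provider's formula.

```python
def billable_mbps(samples_mbps):
    """Return the 95th percentile rate: the highest sample after dropping the top 5%."""
    if not samples_mbps:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples_mbps)
    discarded = len(ordered) * 5 // 100  # number of top samples ignored
    return ordered[len(ordered) - discarded - 1]


if __name__ == "__main__":
    # 100 hypothetical samples: steady 10 Mbps with four short spikes to 1000 Mbps.
    samples = [10] * 96 + [1000] * 4
    print(f"Billable rate: {billable_mbps(samples)} Mbps")
```

In this example the four spikes fall inside the discarded 5%, so the billable rate stays at the steady-state level. Spikes that last longer than 5% of the billing period would raise it.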

IP Address Requirements

IP resources have become increasingly scarce and valuable:

IPv4 Considerations:

- Standard allocations typically include 1-5 IPv4 addresses

- Additional addresses often incur significant costs due to IPv4 exhaustion

- IP justification processes may be required for larger allocations

IPv6 Deployment:

- Most providers offer /64 or larger IPv6 allocations at no additional cost

- Implementing IPv6 provides future-proofing and potential performance advantages

- Dual-stack configurations supporting both IPv4 and IPv6 represent the current best practice
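The scale of IPv6 allocations is easier to appreciate with a quick calculation. The sketch below uses Python's standard ipaddress module and the reserved documentation prefix 2001:db8::/32; the /48 allocation is a made-up example, not a statement about what any provider assigns.

```python
import ipaddress
from itertools import islice


def subnet_count(allocation: str, new_prefix: int) -> int:
    """Number of subnets of length new_prefix that fit inside the allocation."""
    network = ipaddress.ip_network(allocation)
    if new_prefix < network.prefixlen:
        raise ValueError("new_prefix must not be shorter than the allocation")
    return 2 ** (new_prefix - network.prefixlen)


def first_subnets(allocation: str, new_prefix: int, limit: int):
    """List the first few subnets without materializing all of them."""
    network = ipaddress.ip_network(allocation)
    return [str(s) for s in islice(network.subnets(new_prefix=new_prefix), limit)]


if __name__ == "__main__":
    single_lan = ipaddress.ip_network("2001:db8:1234::/64")
    print(f"Addresses in one /64: {single_lan.num_addresses}")
    print(f"/64 networks in a /48: {subnet_count('2001:db8:1234::/48', 64)}")
    print(first_subnets("2001:db8:1234::/48", 64, 3))
```

A single /64 already holds 2^64 addresses, which is why IPv6 planning focuses on how many subnets you need rather than how many individual addresses.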

Network Security Architecture

Network protection is essential for dedicated servers:

DDoS Protection Levels:

- Standard protection: Basic filtering that mitigates small-scale attacks (up to 10Gbps)

- Advanced protection: Intermediate mitigation for medium-scale attacks (10-100Gbps)

- Enterprise protection: Comprehensive solutions for large-scale attacks (100Gbps+)

Network Firewall Options:

- Host-based firewalls: Software firewalls running on the server itself

- Network-based firewalls: Dedicated hardware or virtualized firewall appliances

- Distributed firewalls: Cloud-based protection that filters traffic before reaching your infrastructure

For mission-critical applications, implement redundant network connections from different providers using BGP (Border Gateway Protocol) routing to ensure continuous connectivity even during provider outages.

Managed Dedicated Servers Deep Dive

Managed dedicated server offerings provide expert administration atop dedicated hardware, creating a compelling balance between control and convenience.

What “Fully Managed” Actually Includes

The term “fully managed” varies significantly between providers, making it essential to understand specific service inclusions:

Standard Inclusions in Most Fully Managed Services:

- Operating system installation and configuration

- Security patch application and maintenance

- Server monitoring and alert response

- Basic performance optimization

- Control panel installation and management

- Backup system implementation and monitoring

- Initial security hardening and ongoing maintenance

- Technical support for server-level issues

Provider-Dependent Services:

- Application installation and configuration

- Database administration and optimization

- Content management system support

- Custom security rule implementation

- Advanced performance tuning

- Proactive capacity planning

- Code-level troubleshooting

- Migration assistance

When evaluating managed offerings, request detailed service catalogs specifying included and excluded responsibilities to avoid misaligned expectations.

Management Tiers: From Basic to White-Glove Service

Most providers structure managed services in progressive tiers with increasing levels of support:

Basic Management:

- Server monitoring and automated alerts

- Operating system updates

- Hardware-related issue resolution

- Limited support hours (typically business hours)

- Self-service tools with guidance

- Response-based rather than proactive approach

Standard Management:

- 24/7 monitoring and support availability

- Regular security patching and updates

- Performance monitoring with basic optimization

- Control panel maintenance

- Standard backup implementation

- Limited application support

Enhanced Management:

- Proactive system monitoring and intervention

- Regular security audits and hardening

- Performance optimization recommendations

- Database management and tuning

- Extended application support

- Enhanced backup and disaster recovery options

- Dedicated technical account contacts

Premium/White-Glove Management:

- Comprehensive responsibility for dedicated server performance

- Custom monitoring and alerting configuration

- Application-level support and optimization

- Direct access to senior technical resources

- Architectural consultation and planning

- Regular environment reviews and recommendations

- Custom reporting and compliance documentation

Organizations should select management tiers aligned with internal capabilities and the criticality of hosted applications.

Technical Support SLAs and Response Times

Service Level Agreements define provider obligations and form the contractual foundation of managed services:

Critical SLA Components:

- Problem severity definitions and categorization criteria

- Response time commitments for each severity level

- Resolution time objectives or guarantees

- Escalation paths and timeframes

- Support channel availability (phone, email, ticket, chat)

- Guaranteed uptime percentages

- Compensation mechanisms for SLA violations

- Measurement methodologies and reporting frequency

Typical Response Time Frameworks:

- Critical issues (complete outage): 15-30 minutes

- Major issues (significant impact): 1-2 hours

- Standard issues (limited impact): 4-8 hours

- Minor issues (minimal impact): 1-2 business days

Organizations should evaluate SLAs against their business continuity requirements, ensuring alignment between provider capabilities and operational needs.
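When comparing SLAs, it helps to translate uptime percentages into allowed downtime and to check response times against the stated targets. The sketch below assumes a 30-day month and uses the upper bounds of the typical response times listed above; the severity labels and function names are illustrative, not taken from any provider's contract.

```python
from datetime import timedelta

MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60

# Upper bounds of the typical response times listed above (illustrative).
RESPONSE_TARGETS = {
    "critical": timedelta(minutes=30),
    "major": timedelta(hours=2),
    "standard": timedelta(hours=8),
}


def allowed_downtime_minutes(uptime_percent: float) -> float:
    """Minutes of downtime per 30-day month permitted by an uptime guarantee."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    return (100.0 - uptime_percent) / 100.0 * MINUTES_PER_30_DAY_MONTH


def response_within_target(severity: str, elapsed: timedelta) -> bool:
    """True if the provider's first response arrived within the target."""
    return elapsed <= RESPONSE_TARGETS[severity]


if __name__ == "__main__":
    for uptime in (99.9, 99.99):
        minutes = allowed_downtime_minutes(uptime)
        print(f"{uptime}% uptime allows about {minutes:.1f} minutes of downtime per month")
    print(response_within_target("critical", timedelta(minutes=20)))
```

Seeing the guarantee in minutes makes it easier to judge whether it matches your recovery objectives.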

Security Management in Managed Environments

Security management constitutes a crucial component of managed service offerings:

Provider Security Responsibilities:

- Operating system vulnerability patching

- Perimeter security configuration and maintenance

- Firewall rule implementation as requested

- Basic intrusion detection implementation

- Security advisory notification

- Incident response coordination

- Access control management

- Security best practice implementation

Client Security Responsibilities:

- Application-level security

- User account security policies

- Content and data security

- Security requirement definition

- Compliance documentation requirements

- Final approval of security changes

Effective security management requires clear delineation of responsibilities between provider and client, documented in service agreements and periodically reviewed.

When to Choose Managed Over Self-Administered Servers

Several factors influence the decision between managed and self-administered dedicated servers:

Factors Favoring Managed Services:

- Limited internal technical expertise

- 24/7 operation requirements without matching staff coverage

- Focus on application development rather than infrastructure

- Regulatory compliance with specialized knowledge requirements

- Rapid deployment needs without setup delay

- Predictable monthly cost requirements including support

Factors Favoring Self-Administration:

- Strong existing technical team with relevant expertise

- Highly customized or unusual server configurations

- Specialized security requirements requiring direct control

- Cost sensitivity where internal resources already exist

- Unique software requirements incompatible with standard management

Many organizations implement hybrid approaches where providers handle infrastructure management while internal teams focus on application-specific requirements.

Operating System Considerations

The operating system forms the foundation of dedicated server functionality, influencing everything from performance characteristics to application compatibility.

Windows Server Editions and Licensing Models

Microsoft offers several Windows Server editions with distinct feature sets and licensing requirements:

Windows Server Standard Edition:

- Supports core infrastructure roles

- Limited virtualization rights (2 VMs per license)

- Suitable for small to medium deployments

- Cost-effective for physical server deployments

Windows Server Datacenter Edition:

- Unlimited virtualization rights

- All available Windows Server features

- Advanced storage capabilities

- Ideal for virtualization hosts or large deployments

Windows Server Essentials:

- Limited to 25 users and 50 devices

- Simplified administration experience

- Integrated Microsoft 365 capabilities

- Designed for small business environments

Licensing Models:

- Core-based licensing with 16-core minimum requirement

- Client Access Licenses (CALs) required for user/device connections

- Software Assurance options for upgrade rights

- Specific licensing for virtualized environments

When selecting Windows Server editions, consider both current requirements and future growth to avoid licensing complications during expansion.
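The core-based model can be illustrated with a short calculation. The 16-core per-server minimum comes from the list above; the 8-core per-processor minimum used here is an assumption that this guide does not state, so confirm both figures against current Microsoft licensing terms before budgeting.

```python
def core_licenses_required(cores_per_processor, per_server_min=16, per_processor_min=8):
    """Estimate the core licenses needed for one physical server.

    per_processor_min is an assumption not stated in this guide; verify it
    against the current Microsoft licensing terms.
    """
    if not cores_per_processor:
        raise ValueError("at least one processor is required")
    licensed = sum(max(cores, per_processor_min) for cores in cores_per_processor)
    return max(licensed, per_server_min)


if __name__ == "__main__":
    # Hypothetical servers described by physical cores per socket.
    for layout in ([4], [12, 12], [32, 32]):
        print(f"{layout}: {core_licenses_required(layout)} core licenses")
```

The example shows that a small single-socket server still pays for the per-server minimum, while larger servers are licensed for every physical core.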

Popular Linux Distributions for Dedicated Server Environments

Linux distributions offer different balances of stability, support lifecycles, and feature sets:

Red Hat Enterprise Linux (RHEL):

- Commercial Linux distribution with paid support

- Long-term stability and security focus

- Extensive enterprise feature set

- Compatible with major enterprise applications

- Strong security certifications and compliance features

Ubuntu Server:

- Regular release cycle with long-term support versions

- User-friendly administration

- Extensive package availability

- Strong community support

- Good balance between cutting-edge features and stability

CentOS / Rocky Linux / AlmaLinux:

- Binary compatible with RHEL without subscription costs

- Highly stable and conservative update approach

- Popular for web hosting environments

- Strong security track record

- Extensive enterprise adoption

Debian:

- Exceptional stability and security focus

- Conservative package inclusion policy

- Minimal resource requirements

- Vast package repository

- Strong upgrade path between versions

SUSE Linux Enterprise Server:

- Commercial distribution with enterprise support

- Strong in financial and retail sectors

- Comprehensive management tools

- Extended lifecycle support options

- Specialized for mission-critical workloads

Distribution selection should consider internal expertise, application compatibility requirements, and support needs.

OS Security Hardening Best Practices

Operating system security hardening establishes the foundation for overall dedicated server security:

Universal Hardening Principles:

- Implement the principle of least privilege

- Remove or disable unnecessary services and components

- Establish baseline configurations and deviation monitoring

- Implement regular patching procedures

- Configure centralized logging and monitoring

- Implement strong authentication mechanisms

- Establish network-level access controls

Windows-Specific Hardening:

- Implement Group Policy-based security controls

- Configure Windows Defender or third-party security tools

- Implement AppLocker or equivalent application control

- Utilize Windows Server security baselines

- Enable Enhanced Security Configuration for Internet Explorer

- Implement role-based access control

Linux-Specific Hardening:

- Configure SELinux or AppArmor mandatory access controls

- Implement TCP wrappers for service access control

- Configure kernel security modules

- Implement file integrity monitoring

- Use package verification before installation

- Configure service-specific chroot environments

Security hardening should be implemented through documented, repeatable processes to ensure consistency across dedicated server deployments.
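One way to make hardening repeatable is to record a baseline and check for deviations, as with the file integrity monitoring mentioned above. The sketch below hashes a set of files with SHA-256 and reports anything missing or modified. It is a minimal illustration with hypothetical function names, not a replacement for a dedicated integrity monitoring tool.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(paths) -> dict:
    """Record the current hash of every monitored file."""
    return {str(path): sha256_of(path) for path in paths}


def find_deviations(baseline: dict) -> dict:
    """Compare files on disk with the baseline and report differences."""
    deviations = {}
    for path, expected in baseline.items():
        if not Path(path).is_file():
            deviations[path] = "missing"
        elif sha256_of(path) != expected:
            deviations[path] = "modified"
    return deviations


if __name__ == "__main__":
    # Demonstrate with a temporary file standing in for a configuration file.
    with tempfile.TemporaryDirectory() as directory:
        config = Path(directory) / "example.conf"
        config.write_text("PermitRootLogin no\n")
        baseline = build_baseline([config])
        config.write_text("PermitRootLogin yes\n")
        print(find_deviations(baseline))
```

In practice the baseline itself must be stored somewhere an attacker cannot alter it, otherwise the comparison proves little.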

Performance Tuning for Different Operating Systems

Operating system performance tuning optimizes resource utilization and application response:

Windows Performance Tuning:

- Configure power settings for performance mode

- Optimize page file configuration

- Adjust TCP/IP parameters for network workloads

- Configure appropriate filesystem allocation unit sizes

- Optimize background service priorities

- Implement appropriate disk I/O scheduling

- Configure memory management for workload characteristics

Linux Performance Tuning:

- Select appropriate I/O schedulers for workload types

- Configure swappiness and memory pressure settings

- Optimize filesystem parameters and journal settings

- Configure network buffer sizes and TCP parameters

- Implement appropriate process scheduling policies

- Utilize transparent huge pages appropriately

- Configure system resource limits

Performance tuning should begin with measurement and benchmarking to identify bottlenecks, followed by targeted optimizations and validation.
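Measurement starts with knowing the current settings. The sketch below reads a few of the Linux tunables mentioned above from their usual /proc and /sys locations and returns None where a file is unavailable. The device name sda is an assumption; adjust it for your system, and note that on non-Linux hosts every value will be None.

```python
from pathlib import Path


def active_io_scheduler(scheduler_text: str):
    """Return the bracketed entry from text such as 'mq-deadline kyber [none]'."""
    for token in scheduler_text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    return None


def read_setting(path: str):
    """Read a single-line kernel setting, or None if it cannot be read."""
    try:
        return Path(path).read_text().strip()
    except OSError:
        return None


def snapshot(device: str = "sda") -> dict:
    """Collect current values before changing anything (device is an assumption)."""
    scheduler_raw = read_setting(f"/sys/block/{device}/queue/scheduler")
    return {
        "swappiness": read_setting("/proc/sys/vm/swappiness"),
        "transparent_hugepage": read_setting("/sys/kernel/mm/transparent_hugepage/enabled"),
        "io_scheduler": active_io_scheduler(scheduler_raw) if scheduler_raw else None,
    }


if __name__ == "__main__":
    for name, value in snapshot().items():
        print(f"{name}: {value}")
```

Capturing this snapshot before and after each change gives a record that can be compared against benchmark results and rolled back if a change does not help.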

Control Panel Options Across Different OS Environments

Control panels simplify dedicated server administration through graphical interfaces and automation:

Windows Control Panel Options:

- Windows Admin Center: Microsoft’s browser-based management platform

- SolidCP: Multi-server Windows environment management

- Plesk for Windows: Commercial control panel with Windows optimization

- WebsitePanel: Open-source Windows web hosting management

Linux Control Panel Options:

- cPanel/WHM: Industry standard for web hosting management

- Plesk: Cross-platform commercial control panel

- DirectAdmin: Lightweight commercial control panel

- Webmin/Virtualmin: Open-source server management suite

- CyberPanel: OpenLiteSpeed-focused web hosting panel

Control Panel Selection Factors:

- Administration experience level requirements

- Licensing cost considerations

- Integration with existing tools and workflows

- Specific feature requirements

- Resource overhead tolerance

- Scalability to multiple server environments

Control panels introduce additional complexity and potential security considerations, requiring careful evaluation of benefits against drawbacks for each use case.

Bare Metal Dedicated Server Advantages

Bare metal dedicated servers provide direct hardware access without virtualization layers, offering unique advantages for specific workloads.

Applications That Demand Bare Metal Performance

Certain workloads benefit significantly from the raw performance provided by bare metal environments:

High-Performance Computing (HPC):

- Scientific simulations requiring massive computational power

- Rendering farms for 3D animation and visual effects

- Genomic sequencing and computational biology

- Weather modeling and climate research

- Financial modeling and algorithmic trading systems

I/O Intensive Operations:

- High-volume database systems with strict latency requirements

- Real-time analytics platforms processing massive data streams

- Media encoding and transcoding operations

- Content delivery networks serving large files

- Storage systems handling thousands of concurrent connections

Low-Latency Applications:

- High-frequency trading platforms

- Real-time bidding systems for programmatic advertising

- Online gaming servers where milliseconds matter

- Voice and video processing applications

- Industrial control systems with timing constraints

Software Defined Networking:

- Network function virtualization (NFV)

- Custom routing implementations

- Packet inspection and security appliances

- Software-defined WAN implementations

- Network testing and simulation environments

These applications benefit from eliminating the overhead and resource contention inherent in virtualized environments.

Hardware Customization Options

Bare metal dedicated servers typically offer extensive customization possibilities:

Processor Customization:

- Specific CPU models optimized for workload characteristics

- Clock speed vs. core count balancing

- Special instruction set requirements (AVX-512, etc.)

- NUMA configuration options for multi-socket systems

- CPU power management configuration

Memory Configuration Options:

- Specific DIMM population strategies for bandwidth optimization

- Memory speed and latency selection

- NUMA topology considerations

- Registered vs. load-reduced memory options

- ECC requirement specifications

Storage Customization:

- Custom RAID controller configuration

- Mixed storage tiers within a single server

- Specialized cache devices (Optane, etc.)

- Custom partition alignment for specific workloads

- Direct-attached storage expansion options

Network Interface Options:

- Multiple interface types (1GbE, 10GbE, 25GbE, etc.)

- Specialized NICs with offloading capabilities

- SR-IOV support for advanced virtualization

- RDMA capabilities for high-performance networking

- Custom network adapter firmware configurations

These customization options allow organizations to precisely tailor hardware to specific workload requirements, optimizing both performance and cost-effectiveness.

Virtualization on Bare Metal: Creating Your Own Cloud

Bare metal dedicated servers provide an ideal foundation for building private cloud environments:

Hypervisor Deployment Options:

- VMware ESXi for enterprise virtualization environments

- Microsoft Hyper-V for Windows-centric organizations

- KVM for open-source virtualization implementations

- Xen for specialized use cases and legacy support

- Proxmox VE for community-supported virtualization

Container Platform Foundations:

- Docker hosts for containerized application deployment

- Kubernetes clusters for container orchestration

- OpenShift for enterprise container platforms

- Rancher for multi-cluster container management

- LXD for system container implementations

Private Cloud Implementations:

- OpenStack for comprehensive cloud infrastructure

- CloudStack for alternative open-source cloud management

- oVirt for KVM-based datacenter virtualization

- VMware vCloud for enterprise private cloud

- Microsoft Azure Stack for hybrid cloud scenarios

Building virtualization environments on bare metal offers several key advantages:

- Complete control over the physical infrastructure

- Elimination of nested virtualization penalties

- Custom security implementations at the hardware level

- Precise resource allocation without provider limitations

- Cost optimization through elimination of provider margins

Organizations increasingly implement internal cloud platforms on bare metal infrastructure to combine cloud operational models with dedicated server performance characteristics.
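
As a simple illustration of resource allocation on a self-managed hypervisor host, the sketch below estimates how many identical virtual machines fit on one bare metal server. The `Host` class, the reserved-memory default, and the overcommit ratio are hypothetical values for illustration; appropriate figures depend on the hypervisor and workload.

```python
from dataclasses import dataclass


@dataclass
class Host:
    physical_cores: int
    memory_gb: int
    hypervisor_reserved_gb: int = 8  # assumed overhead; tune per platform


def vm_capacity(host: Host, vm_vcpus: int, vm_memory_gb: int,
                cpu_overcommit: float = 1.0) -> int:
    """Number of identical VMs that fit, limited by CPU or memory,
    whichever runs out first. Memory is not overcommitted here."""
    by_cpu = int(host.physical_cores * cpu_overcommit // vm_vcpus)
    by_memory = (host.memory_gb - host.hypervisor_reserved_gb) // vm_memory_gb
    return max(0, min(by_cpu, by_memory))


# Hypothetical host: 32 cores, 256 GB RAM; VMs of 4 vCPUs and 16 GB each.
host = Host(physical_cores=32, memory_gb=256)
print("no overcommit:", vm_capacity(host, 4, 16))
print("2:1 CPU overcommit:", vm_capacity(host, 4, 16, cpu_overcommit=2.0))
```

Because the organization controls the physical host, the overcommit ratio is its own decision rather than a provider's.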

Industry-Specific Bare Metal Configurations

Different industries have developed specialized bare metal configurations for their unique requirements:

Financial Services:

- Ultra-low latency network adapters with kernel bypass capabilities

- CPU optimization for single-threaded performance

- High-precision timing hardware for transaction synchronization

- Memory-optimized configurations for trading algorithms

- Compliance-friendly audit logging capabilities

Media and Entertainment:

- GPU-accelerated rendering nodes

- High-bandwidth storage subsystems for raw media files

- Memory optimization for content creation software

- Network configuration for collaborative workflows

- Specialized audio processing hardware integration

Healthcare and Life Sciences:

- Memory-intensive configurations for genomic analysis

- Compliance-certified hardware for protected health information

- Accelerator cards for specific computational biology workloads

- High-reliability components for critical care systems

- Secure enclave support for protected data processing

Gaming and eSports:

- CPU optimization for game server processes

- Network tuning for minimal latency and jitter

- DDoS protection at the hardware level

- Memory configuration for large player counts

- Geographic distribution for regional performance

Research and Education:

- High core-count configurations for parallel workloads

- Specialized accelerators for scientific computing

- Large memory configurations for data analysis

- High-performance interconnects for cluster computing

- Power-efficient designs for extended computation

These specialized configurations demonstrate how bare metal servers can be optimized for specific industry vertical requirements.

Cost-Benefit Analysis: When a Bare Metal Dedicated Server Makes Financial Sense

The decision to deploy bare metal infrastructure involves careful financial analysis:

Cost Advantages of Bare Metal:

- Elimination of hypervisor licensing costs when workloads run directly on the hardware

- Better price-performance ratio for steady workloads

- Predictable monthly expenses without usage-based charges

- Reduced software license costs in certain scenarios (per-VM licensing)

- Elimination of “noisy neighbor” performance impacts

Additional Cost Considerations:

- Higher initial resource allocation requirements

- Potentially longer commitment periods

- Less flexibility for rapid scaling compared to cloud

- Higher migration costs when hardware refresh is required

- Additional management complexity and potential staffing costs

Financial Breakeven Analysis Factors:

- Workload stability and predictability

- Resource utilization percentage

- Contract duration and commitment terms

- Internal IT management capabilities and costs

- Application licensing model (physical vs. virtual)

Most organizations find bare metal financially advantageous for:

- Stable, high-utilization workloads running 24/7

- Performance-sensitive applications where consistent response is critical

- Large-scale deployments with predictable growth patterns

- Applications with licensing models favoring physical deployment

- Workloads requiring specialized hardware configurations unavailable in cloud environments

A comprehensive TCO (Total Cost of Ownership) analysis should include not just direct infrastructure costs but also operational expenses, management overhead, and performance impact on business outcomes.
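
The utilization factor in a breakeven analysis can be sketched with simple arithmetic. The example below compares a flat monthly bare metal fee against an hourly-billed cloud instance of comparable size. The prices are hypothetical, and the sketch covers direct infrastructure cost only; a full TCO analysis would add the operational and staffing costs mentioned above.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


def breakeven_utilization(bare_metal_monthly: float, cloud_hourly: float) -> float:
    """Fraction of the month a cloud instance must run before the flat
    bare metal fee becomes the cheaper option."""
    return bare_metal_monthly / (cloud_hourly * HOURS_PER_MONTH)


def cheaper_option(utilization: float, bare_metal_monthly: float,
                   cloud_hourly: float) -> str:
    """Compare monthly cost at a given utilization (0.0 to 1.0)."""
    cloud_monthly = cloud_hourly * HOURS_PER_MONTH * utilization
    return "bare metal" if bare_metal_monthly < cloud_monthly else "cloud"


# Hypothetical prices: $400/month dedicated vs. $1.00/hour cloud.
threshold = breakeven_utilization(400, 1.00)
print(f"breakeven at {threshold:.0%} utilization")
print("at 30% utilization:", cheaper_option(0.30, 400, 1.00))
print("at 90% utilization:", cheaper_option(0.90, 400, 1.00))
```

Under these assumed prices, a workload that runs around the clock sits well past the breakeven point, which matches the pattern described for stable, high-utilization workloads.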

Dedicated Server Rental Models

Rental models offer alternative ways to acquire dedicated server infrastructure, each with different financial and operational characteristics.

Short-Term vs. Long-Term Server Rentals

Dedicated server rental agreements offer varying commitment periods with distinct advantages:

Short-Term Rentals (1-6 months):

- Higher monthly costs but minimal commitment

- Ideal for projects with defined endpoints

- Flexibility to adjust specifications as requirements evolve

- Lower financial risk for experimental initiatives

- Ability to test configurations before longer commitments

Medium-Term Rentals (6-12 months):

- Moderate cost reduction compared to monthly rates

- Balance between commitment and flexibility

- Suitable for seasonal business needs

- Alignment with annual budgeting cycles

- Often include limited hardware refresh options

Long-Term Rentals (12-36+ months):

- Significant cost reductions through extended commitment

- Hardware refresh options typically included

- Stability for production environments

- Better support for depreciation accounting

- Enhanced customization options

Organizations frequently maintain mixed portfolios of rental durations aligned with different workload characteristics and business requirements.

Capacity Planning for Variable Workloads

Effective capacity planning balances resource availability against cost management for variable workloads:

Workload Analysis Techniques:

- Historical usage pattern analysis with seasonal adjustments

- Correlation identification between business metrics and resource needs

- Peak-to-average ratio calculation for sizing baseline capacity

- Growth trend projection for future requirements

- Benchmark testing to establish resource requirements per transaction
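
Two of these techniques, the peak-to-average ratio and growth trend projection, reduce to short calculations. The sketch below uses hypothetical utilization samples and an assumed compound growth rate; real analyses would draw on much longer histories and account for seasonality.

```python
from statistics import mean
from typing import Sequence


def peak_to_average(samples: Sequence[float]) -> float:
    """Ratio of the highest observed load to the mean load."""
    return max(samples) / mean(samples)


def project_demand(current: float, monthly_growth_rate: float, months: int) -> float:
    """Compound-growth projection of demand after the given number of months."""
    return current * (1 + monthly_growth_rate) ** months


# Hypothetical hourly CPU utilization samples (percent).
cpu_percent = [40, 50, 60, 50]
ratio = peak_to_average(cpu_percent)
# Assumed 5% month-over-month growth in peak demand over one year.
future_peak = project_demand(max(cpu_percent), 0.05, 12)
print(f"peak-to-average: {ratio:.2f}, projected peak in 12 months: {future_peak:.1f}%")
```

A projected peak above 100 percent of current capacity is a signal that the baseline needs to grow before that date.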

Capacity Strategy Options:

- Baseline capacity with burst capability through temporary resources

- Scheduled capacity variation aligned with predictable demand patterns

- Hybrid architecture with dedicated server baseline and cloud burst capacity

- Geographic distribution for follow-the-sun workload patterns

- Reserve capacity arrangements for priority activation

Monitoring and Adjustment:

- Real-time resource utilization tracking

- Predictive analytics for capacity forecasting

- Automated alerting for capacity threshold violations

- Regular capacity review and adjustment processes

- Post-event analysis for planning refinement

Effective capacity planning for variable workloads often combines permanent dedicated server infrastructure for baseline requirements with flexible capacity options for peak periods.
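
The split between permanent baseline and flexible peak capacity can be framed as a small cost minimization. In the sketch below, demand is expressed in whole servers per hour, and both prices and the demand profile are hypothetical. It assumes burst capacity is billed per server-hour and is always available.

```python
def total_cost(hourly_demand, baseline_servers, dedicated_monthly, burst_hourly):
    """Monthly cost: a flat fee for each baseline server plus hourly
    fees for any demand above the baseline."""
    burst_server_hours = sum(max(0, d - baseline_servers) for d in hourly_demand)
    return baseline_servers * dedicated_monthly + burst_server_hours * burst_hourly


def best_baseline(hourly_demand, dedicated_monthly, burst_hourly):
    """Try every baseline size from zero up to peak demand and
    return the cheapest one."""
    candidates = range(0, max(hourly_demand) + 1)
    return min(candidates,
               key=lambda n: total_cost(hourly_demand, n, dedicated_monthly, burst_hourly))


# Hypothetical month: 650 hours needing 4 servers, 80 peak hours needing 10.
demand = [4] * 650 + [10] * 80
baseline = best_baseline(demand, dedicated_monthly=300, burst_hourly=1.00)
print("cheapest baseline:", baseline, "servers")
print("monthly cost:", total_cost(demand, baseline, 300, 1.00))
```

With short, infrequent peaks, the cheapest baseline tends to sit near steady-state demand rather than peak demand.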

Contract Flexibility and Scaling Options

Rental agreements increasingly offer flexibility features addressing dynamic business needs:

Flexible Contract Provisions:

- Mid-term specification upgrade options

- Contract duration extension without renegotiation

- Early termination options with defined penalties

- Conversion paths from rental to purchase

- Equipment substitution rights for technology changes

Vertical Scaling Options:

- CPU core activation on demand

- Memory capacity expansion capability

- Storage capacity extension options

- Bandwidth burst capabilities during peak periods

- GPU or accelerator addition options

Horizontal Scaling Provisions:

- Additional dedicated server deployment with expedited provisioning

- Preferential pricing for multiple server deployments

- Cross-connection options between rental servers

- Load balancer integration for multi-server deployments

- Consistent configuration guarantee across expanded deployment

Organizations should negotiate flexibility provisions during initial contract discussions, as retroactive modifications typically command premium pricing.

Cost Structures: CAPEX vs. OPEX Considerations

Dedicated server rental models transform traditional capital expenditure approaches into operational expense structures:

OPEX Advantages:

- Elimination of large upfront capital investment

- Predictable monthly expenses for budgeting purposes

- Simplified accounting without depreciation tracking

- Potential tax advantages depending on jurisdiction

- Improved cash flow management

- Reduced technology obsolescence risk

CAPEX Advantages:

- Lower total cost over extended periods

- Asset ownership and control

- No ongoing contractual obligations

- Potential tax advantages from depreciation

- No usage or resource constraints from provider

Financial Analysis Factors:

- Internal cost of capital and hurdle rates

- Cash flow priorities and constraints

- Financial reporting preferences

- Technology refresh cycle expectations

- Operational flexibility requirements

- Tax treatment variations between approaches

Many organizations implement hybrid approaches with foundational infrastructure as capital investment supplemented by rental resources for flexibility and specialized requirements.
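
The role of the internal cost of capital can be illustrated with a present-value comparison. The sketch below is deliberately simplified: all figures are hypothetical, payments fall at the end of each year, and it ignores tax treatment and the residual value of purchased hardware.

```python
def present_value(cash_flows, annual_rate):
    """Discount yearly cash flows; cash_flows[0] occurs today (year 0)."""
    return sum(cf / (1 + annual_rate) ** year for year, cf in enumerate(cash_flows))


def compare_purchase_vs_rental(purchase_price, annual_operating_cost,
                               annual_rental, years, annual_rate):
    """Return (purchase_pv, rental_pv) of total costs over the period."""
    purchase_flows = [purchase_price] + [annual_operating_cost] * years
    rental_flows = [0.0] + [annual_rental] * years
    return (present_value(purchase_flows, annual_rate),
            present_value(rental_flows, annual_rate))


# Hypothetical: buy for $12,000 plus $1,500/year to operate,
# or rent for $6,000/year, over 3 years at an 8% cost of capital.
purchase_pv, rental_pv = compare_purchase_vs_rental(12000, 1500, 6000, 3, 0.08)
print(f"purchase PV: ${purchase_pv:,.0f}, rental PV: ${rental_pv:,.0f}")
```

A higher discount rate shrinks the present cost of future rental payments while leaving the upfront purchase untouched, which is one reason capital-constrained organizations lean toward OPEX models.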

Security in the Dedicated Server Environment

Comprehensive security programs address multiple protection layers from physical infrastructure to application-level controls.

Physical Security in Data Centers

Physical security forms the foundation of infrastructure protection:

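Data Center Physical Security Levels (an informal grading used here; the Uptime Institute Tier I-IV classification rates redundancy and availability, not security):

- Level 1: Basic access control and monitoring

- Level 2: Enhanced perimeter protection and surveillance

- Level 3: Multi-factor authentication and comprehensive monitoring

- Level 4: Highest level of protection, with the strictest access controls and monitoring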

Physical Access Control Elements:

- Multi-layer access zones with progressive security

- Biometric authentication systems

- Mantrap entries preventing tailgating

- 24/7 onsite security personnel

- Comprehensive video surveillance coverage

- Visitor management processes with escort requirements

Environmental Security Measures:

- Fire detection and suppression systems

- Water leak detection and protection

- HVAC redundancy and monitoring

- Power protection and backup systems

- Equipment mounting security

- Rack-level security with locking mechanisms

When evaluating providers, look for independent attestations such as a SOC 2 report (issued under the SSAE 18 standard), which validate the provider's physical security controls.

Network Security Infrastructure

Network security controls protect data in transit and prevent unauthorized access:

Perimeter Protection:

- Enterprise-grade firewall implementation

- Intrusion detection and prevention systems

- Border gateway protection

- Traffic filtering and inspection

- Anti-spoofing measures

- DDoS mitigation capabilities

Network Segmentation:

- VLAN implementation for traffic separation

- Private network options for inter-server communication

- Administrative network isolation

- DMZ architecture for public-facing services

- Micro-segmentation for advanced security postures
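
Segmentation usually starts with an addressing plan. The sketch below uses Python's standard `ipaddress` module to carve one private block into equally sized segments. The address block and segment names are hypothetical, and the sketch covers only IP planning; VLAN tagging and firewall rules between segments are configured separately on the network equipment.

```python
import ipaddress


def plan_segments(block: str, names: list, new_prefix: int) -> dict:
    """Assign consecutive, non-overlapping subnets of the block to the
    named segments."""
    network = ipaddress.ip_network(block)
    subnets = list(network.subnets(new_prefix=new_prefix))
    if len(names) > len(subnets):
        raise ValueError("block is too small for the requested segments")
    return dict(zip(names, subnets))


# Hypothetical plan: split 10.20.0.0/24 into /26 segments.
plan = plan_segments("10.20.0.0/24", ["public-dmz", "application", "admin"], 26)
for name, subnet in plan.items():
    print(f"{name:12} {subnet}  ({subnet.num_addresses} addresses)")
```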

Secure Access Methods:

- VPN access for remote administration

- Encrypted management protocols (SSH, HTTPS)

- Jump server architecture for privileged access

- Network access control lists

- Bastion host implementation

- Just-in-time access provisioning
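
The logic behind a network access control list can be sketched in a few lines: rules are checked in order, the first match decides, and anything unmatched is denied. The rule set below is hypothetical and the sketch models only the decision logic; actual enforcement happens in firewalls, switches, or the host's packet filter.

```python
import ipaddress

# Hypothetical rules, evaluated top to bottom; the first match wins.
RULES = [
    ("allow", "10.20.0.128/26", 22),  # admin segment may use SSH
    ("allow", "0.0.0.0/0", 443),      # any IPv4 source may reach HTTPS
]


def is_allowed(source_ip: str, dest_port: int, rules=RULES) -> bool:
    """Return True if the first matching rule allows the connection.
    Connections that match no rule are denied by default."""
    address = ipaddress.ip_address(source_ip)
    for action, cidr, port in rules:
        if port == dest_port and address in ipaddress.ip_network(cidr):
            return action == "allow"
    return False


print(is_allowed("10.20.0.130", 22))   # source inside the admin segment
print(is_allowed("203.0.113.5", 22))   # external source attempting SSH
print(is_allowed("203.0.113.5", 443))  # external source using HTTPS
```

The default-deny fallthrough is the important design choice: administrative ports stay closed unless a rule explicitly opens them for a known source range.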

Traffic Monitoring:

- NetFlow analysis for traffic pattern monitoring

- Deep packet inspection capabilities

- Bandwidth utilization monitoring